Research & Development

Drones In Archaeology

Integrated Data Capture, Processing, and Dissemination in the al-Ula Valley, Saudi Arabia

Published in 2014 by Neil G. Smith, Luca Passone, Said al-Said, Mohamed al-Farhan, and Thomas E. Levy

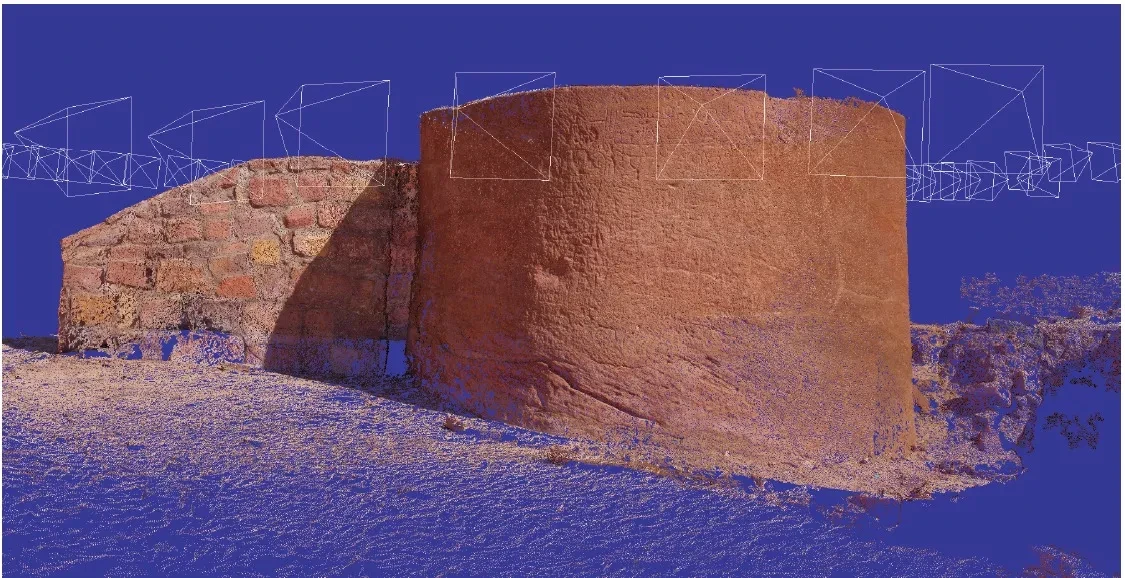

In late 2013, a joint archaeological and computer vision project was initiated to digitally capture archaeological remains in the al-Ula Valley, Saudi Arabia. The goal of the team of archaeologists and computer scientists was to integrate 3D scanning technologies to produce 3D reconstructions of archaeological sites. Unmanned Aerial Vehicles (UAVs) served as the vehicle that made this scanning possible.

UAVs allowed the acquisition of 3D data as easily from the air as from the ground. This project focused on the recent excavations carried out in ancient Dedan by King Saud University and the country’s conservation of the Lihyanite “lion tombs” carved into the ancient city’s cliff faces. Over the next several years this site will be used as a test bed to validate the potential of this emerging technology for rapid cultural heritage documentation.

Additionally, several areas were scanned in Mada’in Saleh, an ancient Nabatean city filled with monumental carved sandstone tomb facades, rivaled only by the capital of the Nabatean empire: Petra.

Paper link

Superpixel-based Convolutional Neural Network for Georeferencing the Drone Images

Published in 2021 by Shihang Feng, Luca Passone and Gerard T. Schuster

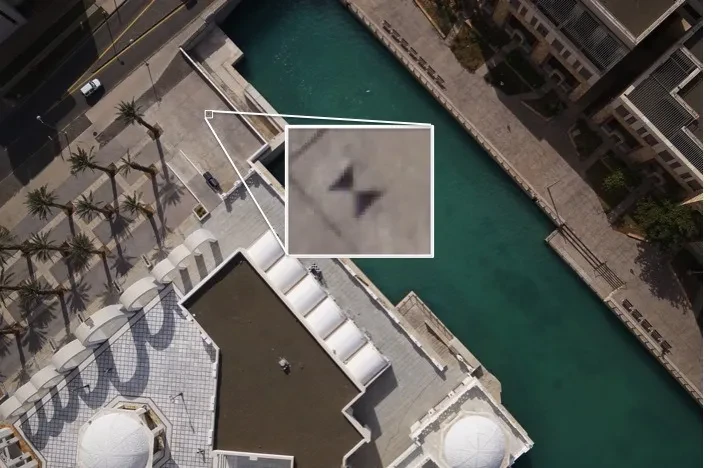

Information extracted from aerial photographs has been used for many practical applications such as urban planning, forest management, disaster relief, and climate modeling. In many cases, the labeling of information in aerial photos is still performed by human experts, resulting in a slow, costly, and error-prone process. This paper shows how a convolutional neural network (CNN) can be used to determine the location of ground control points (GCPs) in aerial photos, which significantly reduces the amount of human labor in identifying GCP locations.

Two CNN methods, sliding-window CNN with superpixel-level majority voting and superpixel-based CNN, are evaluated and analyzed. The results of the classification and segmentation show that both methods can quickly extract the locations of objects from aerial photographs, but only superpixel-based CNN can unambiguously locate the GCPs.
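
To make the superpixel-level majority voting step more concrete, here is a minimal sketch in plain Python. It assumes per-pixel class labels are already available (for instance from a sliding-window classifier) together with a superpixel ID map of the same shape. The function name `superpixel_majority_vote` and the toy arrays are illustrative assumptions, not taken from the paper.

```python
from collections import Counter, defaultdict

def superpixel_majority_vote(pixel_labels, superpixel_ids):
    """Relabel every pixel with the most common class inside its superpixel."""
    votes = defaultdict(Counter)
    # Count how often each class label occurs inside each superpixel.
    for label_row, sp_row in zip(pixel_labels, superpixel_ids):
        for label, sp in zip(label_row, sp_row):
            votes[sp][label] += 1
    # The winning class of a superpixel is the label with the highest count.
    winner = {sp: counts.most_common(1)[0][0] for sp, counts in votes.items()}
    return [[winner[sp] for sp in sp_row] for sp_row in superpixel_ids]

# Toy input: noisy per-pixel predictions (hypothetical data).
pixel_labels = [
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 1, 0],
]
# Two superpixels: the left two columns (ID 0) and the right two columns (ID 1).
superpixel_ids = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
smoothed = superpixel_majority_vote(pixel_labels, superpixel_ids)
```

In this toy case the two stray predictions are outvoted, so each superpixel ends up with a single class. How the superpixels themselves are computed is outside the scope of the sketch.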

Paper link

Aerial Path Planning for Urban Scene Reconstruction: A Continuous Optimization Method and Benchmark

Small unmanned aerial vehicles (UAVs) are ideal capturing devices for high-resolution urban 3D reconstructions using multi-view stereo. Nevertheless, practical considerations, such as safety, usually limit access to the scan target to a short period of time, especially in urban environments. Therefore, it becomes crucial to perform both view and path planning to minimize flight time while ensuring complete and accurate reconstructions. In this work, we address the challenge of automatic view and path planning for UAV-based aerial imaging with the goal of urban reconstruction from multi-view stereo.
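
As a rough illustration of why the visiting order of viewpoints matters for flight time, the sketch below orders a set of camera positions with a greedy nearest-neighbor heuristic. This is not the continuous optimization method named in the title; it is a much simpler baseline, and the function names and coordinates are assumptions made for the example.

```python
import math

def greedy_tour(start, viewpoints):
    """Order viewpoints by repeatedly flying to the nearest unvisited one."""
    remaining = list(viewpoints)
    order = []
    current = start
    while remaining:
        nearest = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nearest)
        order.append(nearest)
        current = nearest
    return order

def path_length(start, order):
    """Total straight-line distance flown from start through the ordered points."""
    points = [start, *order]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

# Hypothetical (x, y, z) camera positions in meters.
start = (0.0, 0.0, 10.0)
viewpoints = [(30.0, 0.0, 10.0), (10.0, 0.0, 10.0), (20.0, 0.0, 10.0)]
order = greedy_tour(start, viewpoints)
```

For these three points the greedy order halves the distance flown compared with visiting them in the order given. A real planner would also have to score reconstruction coverage and respect safety constraints, which this sketch ignores.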

Paper link

Sim4CV: A Photo-Realistic Simulator for Computer Vision Applications

We present a photo-realistic training and evaluation simulator (Sim4CV, http://www.sim4cv.org) with extensive applications across various fields of computer vision. Built on top of Unreal Engine, the simulator integrates full-featured physics-based cars, unmanned aerial vehicles (UAVs), and animated human actors in diverse urban and suburban 3D environments. We demonstrate the versatility of the simulator with two case studies: autonomous UAV-based tracking of moving objects and autonomous driving using supervised learning.

The simulator fully integrates several state-of-the-art tracking algorithms with a benchmark evaluation tool and a deep neural network architecture for training vehicles to drive autonomously. It generates synthetic photo-realistic datasets with automatic ground truth annotations to easily extend existing real-world datasets and provides extensive synthetic data variety through its ability to reconfigure synthetic worlds on the fly using an automatic world generation tool.

Applications Developed in Sim4CV

Teaching UAVs to Race (AI)

Recent work has tackled the problem of autonomous navigation by imitating a teacher and learning an end-to-end policy, which directly predicts controls from raw images. However, these approaches tend to be sensitive to mistakes by the teacher and do not scale well to other environments or vehicles. To this end, we propose a modular network architecture that decouples perception from control. This modular network architecture is trained using Observational Imitation Learning (OIL), a novel imitation learning variant that supports online training and automatic selection of optimal behavior from observing multiple teachers.
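
The idea of selecting behavior from several teachers can be sketched in a few lines. The example below is only a toy under stated assumptions: each teacher is a function from a state to an action, and a hypothetical `score` function rates each proposal. How OIL actually evaluates and selects teachers is not described in the text above, so none of these names or choices should be read as the authors' method.

```python
def select_best_teacher(state, teachers, score):
    """Return (name, action) for the teacher whose proposed action scores highest."""
    proposals = {name: teacher(state) for name, teacher in teachers.items()}
    best = max(proposals, key=lambda name: score(state, proposals[name]))
    return best, proposals[best]

# Toy 1D setting: the state is the lateral offset from the track center,
# and an action is a correction added to that offset (all hypothetical).
teachers = {
    "cautious": lambda offset: -0.5 * offset,
    "aggressive": lambda offset: -1.5 * offset,
    "ideal": lambda offset: -1.0 * offset,
}

def score(offset, action):
    # Higher is better: penalize the offset that remains after the correction.
    return -abs(offset + action)

name, action = select_best_teacher(2.0, teachers, score)
```

Here the "ideal" teacher wins because its correction cancels the offset exactly. The selected action could then serve as the training target for the learner at that step.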

We apply our proposed methodology to the challenging problem of unmanned aerial vehicle (UAV) racing. We develop a simulator that enables the generation of large amounts of synthetic training data (both UAV-captured images and the corresponding controls) and allows for online learning and evaluation. We train a perception network to predict waypoints from raw image data and a control network to predict UAV controls from these waypoints using OIL. Our modular network is able to autonomously fly a UAV through challenging racetracks at high speeds.
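
The decoupling of perception from control can be illustrated with two independent stages composed into one policy. In the sketch below, `toy_perception` and `toy_control` are hand-written stand-ins for the trained networks, and the image format, waypoint layout, and control tuple are all assumptions made for the example.

```python
def make_policy(perception, control):
    """Compose a perception stage (image -> waypoint) with a control stage
    (waypoint -> controls). Either stage can be swapped independently."""
    def policy(image):
        return control(perception(image))
    return policy

def toy_perception(image):
    """Stand-in for a perception network: steer toward the brightest column."""
    column_sums = [sum(column) for column in zip(*image)]
    brightest = column_sums.index(max(column_sums))
    center = (len(column_sums) - 1) / 2
    return (brightest - center, 1.0)  # (lateral offset, forward distance)

def toy_control(waypoint):
    """Stand-in for a control network: clamped proportional steering."""
    lateral, forward = waypoint
    yaw = max(-1.0, min(1.0, 0.5 * lateral))
    throttle = max(0.0, min(1.0, forward))
    return (throttle, yaw)

policy = make_policy(toy_perception, toy_control)
# Hypothetical 2x5 grayscale image with a bright gate at the far right.
image = [
    [0, 0, 0, 0, 9],
    [0, 0, 0, 0, 9],
]
controls = policy(image)
```

Because the control stage only sees waypoints, it could in principle be reused with a different perception stage, which is one motivation for a modular design over an end-to-end policy.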

Extensive experiments demonstrate that our trained network outperforms its teachers, end-to-end baselines, and even human pilots in simulation.

BVLOS Drone Operation On 5G Network

A platform that lets users control drones remotely, beyond visual line of sight (BVLOS), through a mobile application running on a 5G network.

Functional Requirements

- Allow the user to create a new account.

- Allow the user to sign into their account.

- The system assigns an available drone to the user.

- The user takes control over the selected drone.

- The system enables the user to send control commands to the associated drone.

- The user starts moving the drone as desired.

- The camera starts live streaming the drone footage back to the user.
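
The account, assignment, and command requirements above can be sketched as a small in-memory service. This is an illustrative model only: the class and method names are hypothetical, credential checking is deliberately left out, and video streaming and the 5G transport are not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Drone:
    drone_id: str
    pilot: Optional[str] = None  # username currently in control, if any
    command_log: List[str] = field(default_factory=list)

class DroneService:
    """Toy backend: accounts, sign-in, drone assignment, command dispatch."""

    def __init__(self, drone_ids):
        self.accounts = set()
        self.signed_in = set()
        self.drones = {drone_id: Drone(drone_id) for drone_id in drone_ids}

    def create_account(self, username):
        if username in self.accounts:
            raise ValueError("account already exists")
        self.accounts.add(username)

    def sign_in(self, username):
        # A real system would verify credentials here; this sketch does not.
        if username not in self.accounts:
            raise PermissionError("unknown account")
        self.signed_in.add(username)

    def assign_drone(self, username):
        if username not in self.signed_in:
            raise PermissionError("user must sign in first")
        for drone in self.drones.values():
            if drone.pilot is None:  # first drone without a pilot
                drone.pilot = username
                return drone
        raise RuntimeError("no drone available")

    def send_command(self, username, drone_id, command):
        drone = self.drones[drone_id]
        if drone.pilot != username:
            raise PermissionError("drone is not assigned to this user")
        drone.command_log.append(command)

service = DroneService(["drone-1", "drone-2"])
service.create_account("alice")
service.sign_in("alice")
drone = service.assign_drone("alice")
service.send_command("alice", drone.drone_id, "takeoff")
service.send_command("alice", drone.drone_id, "forward")
```

Checking the pilot on every command means that only the user holding the assignment can move the drone, which mirrors the order of the requirements: sign in, get a drone, then send commands.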

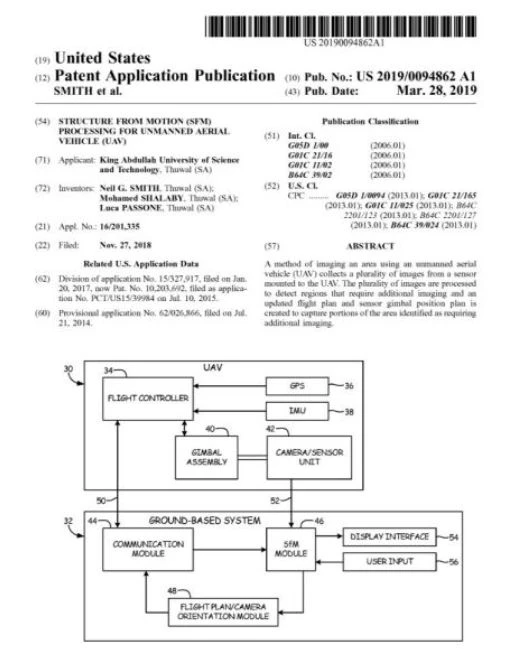

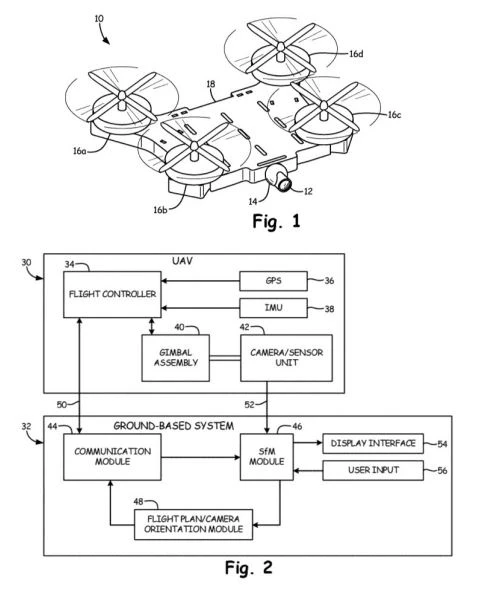

Patents

U.S. Patent Application No. 16/201,335

STRUCTURE FROM MOTION (SfM) PROCESSING FOR UNMANNED AERIAL VEHICLE (UAV)

Publication No.: 2019-0094862. Publication Date: March 28, 2019.